Embedding social good for the future

By Monique J. Morrow

Luca Di Bartolomeo

Trusted, personal digital lock-boxes could be the answer to protect privacy, says technologist Monique J. Morrow. But all of us — from lawmakers to individuals — still need to seize control of our data and decide what kind of social contract is required to humanise the digital world we share.

There is no doubt that humanity must benefit from the solutions that can be created using technology. Technologies such as Artificial Intelligence (AI), Machine Learning, Blockchain, and Quantum Computing serve as enablers for innovation, and the promise is bright for our society. But as a technologist, and a beneficiary of these solutions, I believe there are certain areas to which we must pay special attention.

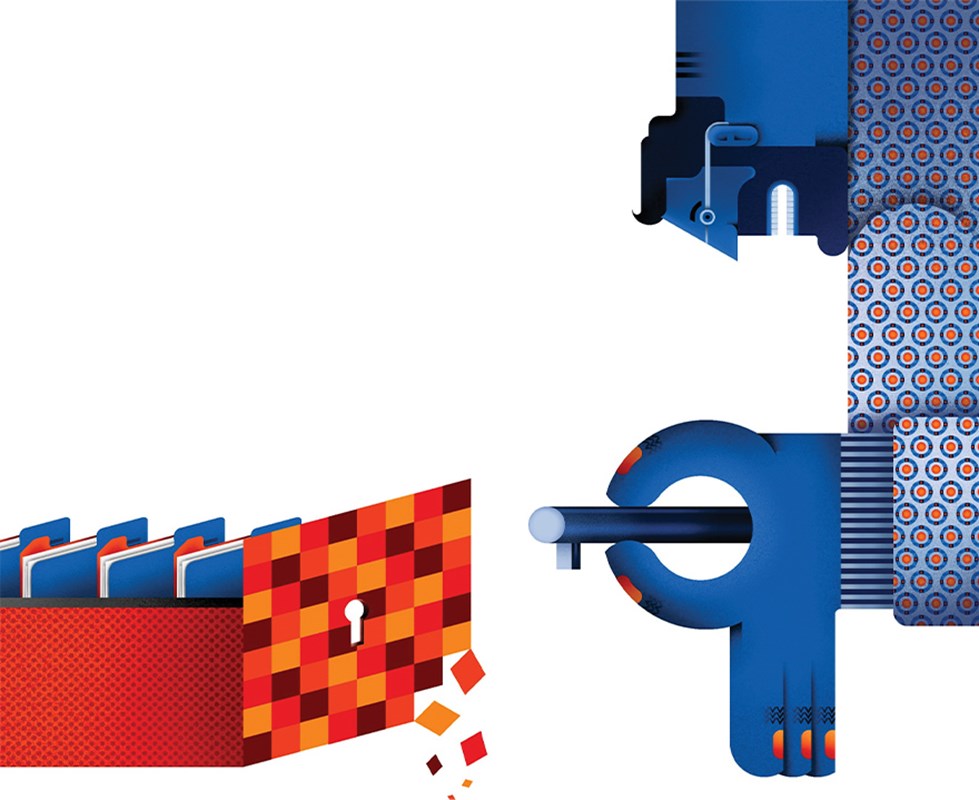

With smartphones, smart homes and smart ‘x’, we emit a huge amount of information every day. The Internet of Things (IoT) exacerbates the risk of data leakage that may result in data misuse, user profiling and user traceability. Data privacy laws focus on unlawful data processing, enforced through data audits and stiff corporate penalties. Data security, however, is also a matter of safety: preventing harm to the individual and harm to an organisation, the latter often in the form of ransomware. At a macro level, there is a growing threat, essentially the weaponisation of data, such that ‘live by the digit, die by the digit’ will be ominously embedded in our society.

Therefore, for me, making the case that emerging technologies must benefit humanity is foundational.

There are three core points to consider. The first is around the liberty of identity and the consideration of a digital instantiation, whereby you could have your key data with you at all times, portable in your own digital lockbox. Imagine you live in a country where there is war, and you have to leave immediately. Or imagine all your valuable documents have been lost in an earthquake or fire. Could we envision a distributed digital lockbox where only we as individuals possess the digital keys? Sir Tim Berners-Lee has proposed the creation of ‘Personal Online Data Stores’ or PODs that provide individuals with the capability to manage their own data.

The premise is that the social media platform companies are not only ‘siloed’, but that they enable the very surveillance that transgresses individual privacy, and therefore we require a new definition and shared vision of Internet ethics. One of the value-add propositions for the Humanized Internet, a non-profit organisation of which I am president, has been the creation of ‘Digital Lock Boxes’ managed by individuals themselves.

“Could we envision a distributed digital lockbox where only we as individuals possess the digital keys?”

Monique J. Morrow

The vision of the Humanized Internet Digital Lock Box has been articulated in a few public events over recent years. The architecture of the Humanized Internet Trusted Digital Lockbox ID is built around various building blocks with the ultimate goal of creating a new environment and values such as the promotion of well-being, increased human capability, and liberties in the converged and hyper-connected world. I describe the architecture of the Humanized Internet Lockbox in a forthcoming book, The Humanized Internet: Dignity, Digital Identity and Democracy.

The second point is about control: whether you are in control of your identity, and whether you even care. Every day identities are hacked and/or misused by the very organisations that citizens trust. A general malaise has set in, with individuals asserting either ‘it won’t happen to me’ or ‘it is already too late’.

Self-Sovereign Identity, or SSI, is a step towards a hybrid level of identity control. As the old adage goes, ‘Trust takes years to build, seconds to break, and forever to repair’. ‘The Internet of Trust’ is core when articulating the values of the Humanized Internet. When your data is leaked by accident, hacked in a centralised environment, or monetised without your permission, your trust is violated.

Who do you trust and why? Even if your data is anonymised, where the result is meta-data, you are still not in control. Again, which entities are anonymising your data and for what purpose? It is far too easy to assert that privacy is dead and selective control is no longer valid. The most important action here for lawmakers, governments, private industry and individuals is to ponder the ramifications of selective disclosure, and perhaps the opportunity to execute on it.
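To make selective disclosure concrete, here is a minimal sketch of one common approach: each claim is hashed with its own random salt, a trusted issuer vouches for the set of digests, and the individual later reveals only the claims they choose. The claim names, the values and the helper functions are hypothetical, and the issuer's signature over the digests is left out for brevity.

```python
import hashlib
import json
import secrets


def claim_digest(name: str, value: str, salt: str) -> str:
    """Salted SHA-256 digest of a single claim."""
    encoded = json.dumps([salt, name, value]).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


# Hypothetical claims held privately by the individual.
claims = {"name": "Alice Example", "birth_year": "1990", "nationality": "CH"}
salts = {name: secrets.token_hex(16) for name in claims}

# What a trusted issuer would sign (signature omitted here): digests only.
signed_digests = {claim_digest(n, v, salts[n]) for n, v in claims.items()}


def disclose(names: list) -> list:
    """The holder reveals only the chosen claims, each with its salt."""
    return [(n, claims[n], salts[n]) for n in names]


def check(disclosed: list, digests: set) -> bool:
    """The verifier recomputes each revealed claim's digest and looks it up."""
    return all(claim_digest(n, v, s) in digests for n, v, s in disclosed)


shared = disclose(["nationality"])
print(check(shared, signed_digests))  # True
```

In this sketch the verifier learns the nationality and nothing about the name or birth year, because the undisclosed claims appear only as salted digests.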

The third point concerns ethics and technology. As individuals, we are all being profiled through technology. Take smart homes, for example, where electronic products are now listening and watching you. But have you ever considered who might actually be listening? The potential for misuse is very evident. Humans create these technologies and we really need to define the intentional use, especially as the teams that develop the tech may not reflect the beneficiaries of these solutions, and might even proliferate unwanted bias because of this.

This observation raises the question of how this chasm may be resolved, and by which entities. What kind of social contract is required for the digital world we share? In the 21st century, fostered by the use of social media and the Internet, social contracts have been evolving towards identity politics and the notion of multiple identities, especially when referring to IoT and to the Internet of ‘Everything and Everyone’. Dignity and the struggle for recognition are provoking the requirement for a new social contract that can function in our digital world. Given the pattern of decentralisation, or a hybrid of it, a novel and relevant social contract must put the citizen at the centre of the digital universe as an active player.

While technology has no agency, it is incumbent upon all of us in the technology world to ensure that our teams are diverse in thought and reflect the recipients or beneficiaries of the technology. A shared vision of ‘Internet ethics’ that is multi-stakeholder is certainly a first step. And understanding the triggers for this vision is critical to articulating it.

For example, in their book The Ethical Algorithm, Michael Kearns and Aaron Roth note: ‘In many ways deciding which trust model you want is more important than setting the quantitative privacy parameter of your algorithm’.

Blockchain is a single-source-of-truth technology that essentially removes an intermediary. It is a shared database among organisations that may not know one another, where trust (or lack of trust) is foundational to the technology itself, which is designed to be tamper-resistant and immutable. However, we need to be cautious about the use cases of Blockchain. It is not a cure-all. Personally Identifiable Information (PII) must never be posted on the Blockchain, as this information is immutable and can exist forever. The use cases must be specific, e.g. credentialing information such as your university degrees and work experience, and with linkage to my portable digital lock box of course! The sky is the limit here.
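One way to picture credentialing without putting PII on a ledger is to record only a salted digest of the credential, while the credential itself stays with the individual. The sketch below is illustrative only: the `ledger` list is a stand-in for a real blockchain, and the credential fields and function names are hypothetical.

```python
import hashlib
import json
import secrets


def commit(credential: dict, salt: bytes) -> str:
    """Return a salted SHA-256 digest of a credential (the only value meant for the ledger)."""
    payload = json.dumps(credential, sort_keys=True).encode("utf-8")
    return hashlib.sha256(salt + payload).hexdigest()


# Held privately by the individual, e.g. in a personal lock box (hypothetical data).
credential = {"holder": "Alice Example", "degree": "MSc Computer Science", "year": 2015}
salt = secrets.token_bytes(16)

# Stand-in for a shared, append-only ledger: it stores digests, never the PII itself.
ledger = [commit(credential, salt)]


def verify(presented: dict, presented_salt: bytes, ledger_entries: list) -> bool:
    """A verifier recomputes the digest from what the holder shows and looks it up."""
    return commit(presented, presented_salt) in ledger_entries


print(verify(credential, salt, ledger))  # True
tampered = dict(credential, degree="PhD Computer Science")
print(verify(tampered, salt, ledger))  # False
```

The random salt makes it harder to guess a credential from its digest. Whether a digest of personal data may itself be published is a legal question that this sketch does not settle.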

I am optimistic about the promise that innovation has for our society. The call to action is to embed social good in the development of these technologies to ensure the wider benefits for humanity. It is far too easy to descend into a dystopian world, and the stakes are too high not to engage.